From Digital Maturity to Industrial Transformation

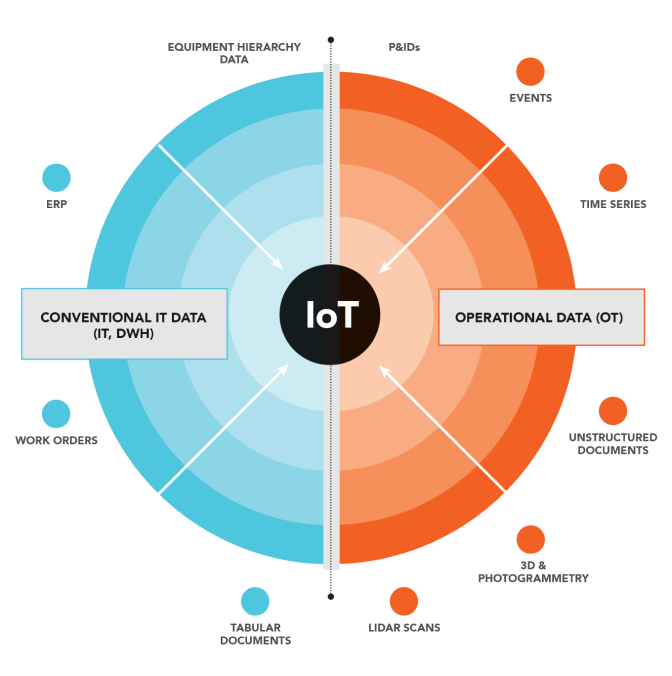

For all industrial organizations, the intelligent use of data produced by operational technology (OT) systems is central to improving operational excellence. Yet while everyone is talking about digital transformation, data use, scaling, and time to value, very few in the industrial world are actually reaping the benefits.

The challenge is not a shortage of data. OT data is the raw material that enables organizations to build more efficient, more resilient operations and to improve employee productivity and customer satisfaction. This data is available in abundance, but industrial organizations struggle to generate value from their increasingly connected operations. According to IDC, only one in four organizations analyzes and extracts value from its data to a significant extent.

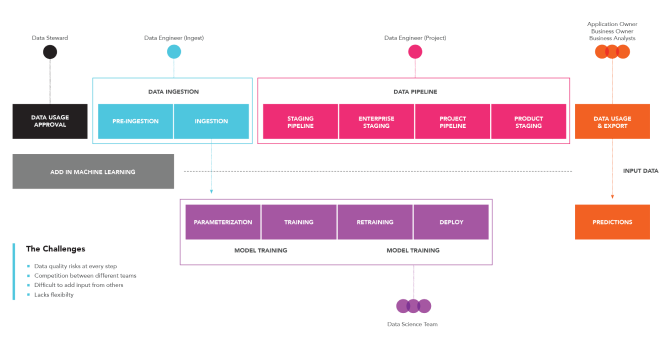

A lack of appropriate tools and processes is a significant obstacle: data workers spend almost 90% of their time searching for, preparing, and governing data. Fear of missing out on the value of data often led organizations to prioritize centralizing data over organizing it. In turn, this produced poorly planned "data swamps" that only perpetuate the problem of dark, uncontextualized data.

Companies that adopted machine learning (ML) to develop predictive algorithms quickly realized how critical trusted, high-quality data is, and that historical data cannot always be trusted. Many organizations are also unable to meet the data governance requirements needed to support data-driven innovation.

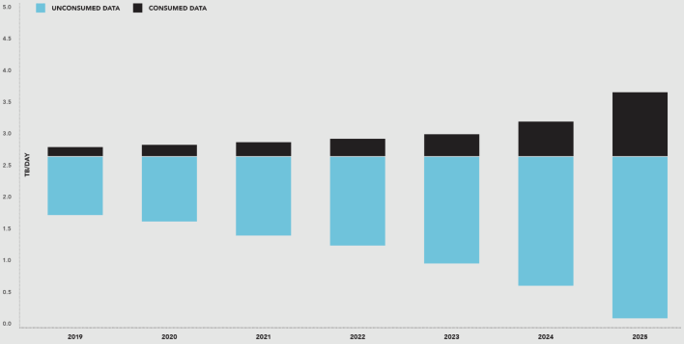

The reality is that as operational assets become more complex, connected, and intelligent, and as they provide more real-time information, it becomes more complex to enable the data-driven decisions needed to plan, operate, and maintain them. To put this in perspective, organizations across manufacturing, oil and gas, utilities, and mining expect their daily operational data throughput to grow by 16% in the next 12 months. Market intelligence provider IDC has measured the data generated daily by operations across these organizations' silos and has modeled how the volume and use of data will expand across industrial sectors. Even accounting for the growing digitalization of operations, IDC predicts that only about 30% of this data will be adequately utilized in 2025 (Fig. 5).